Game Changing Development

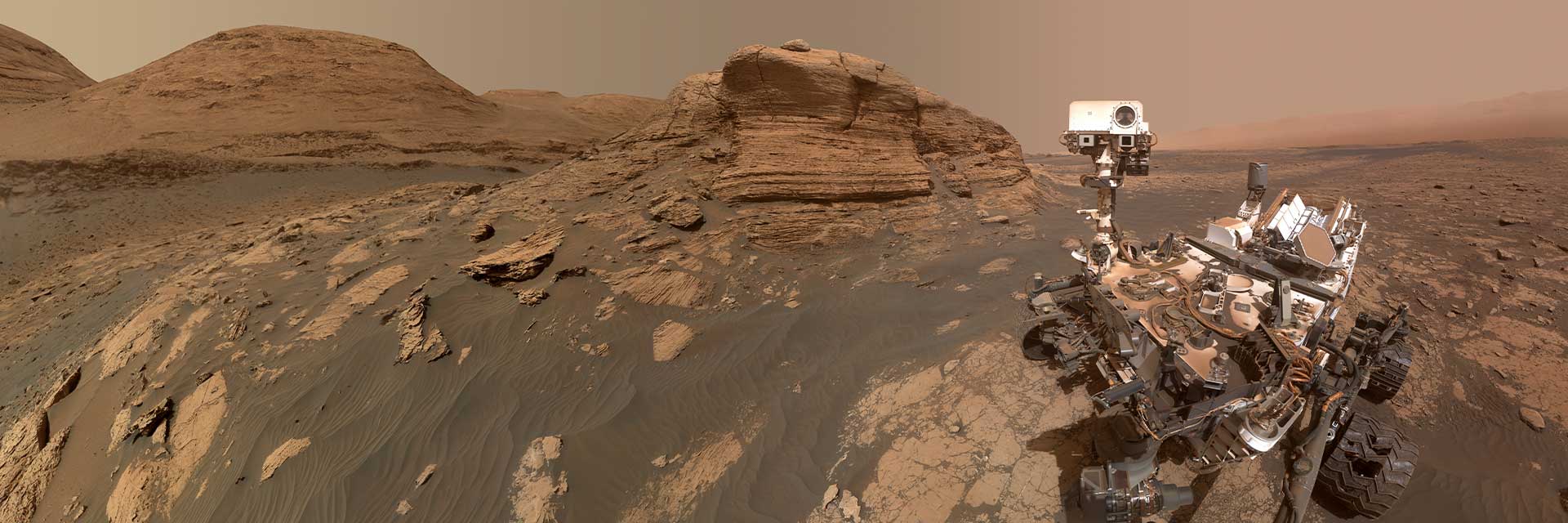

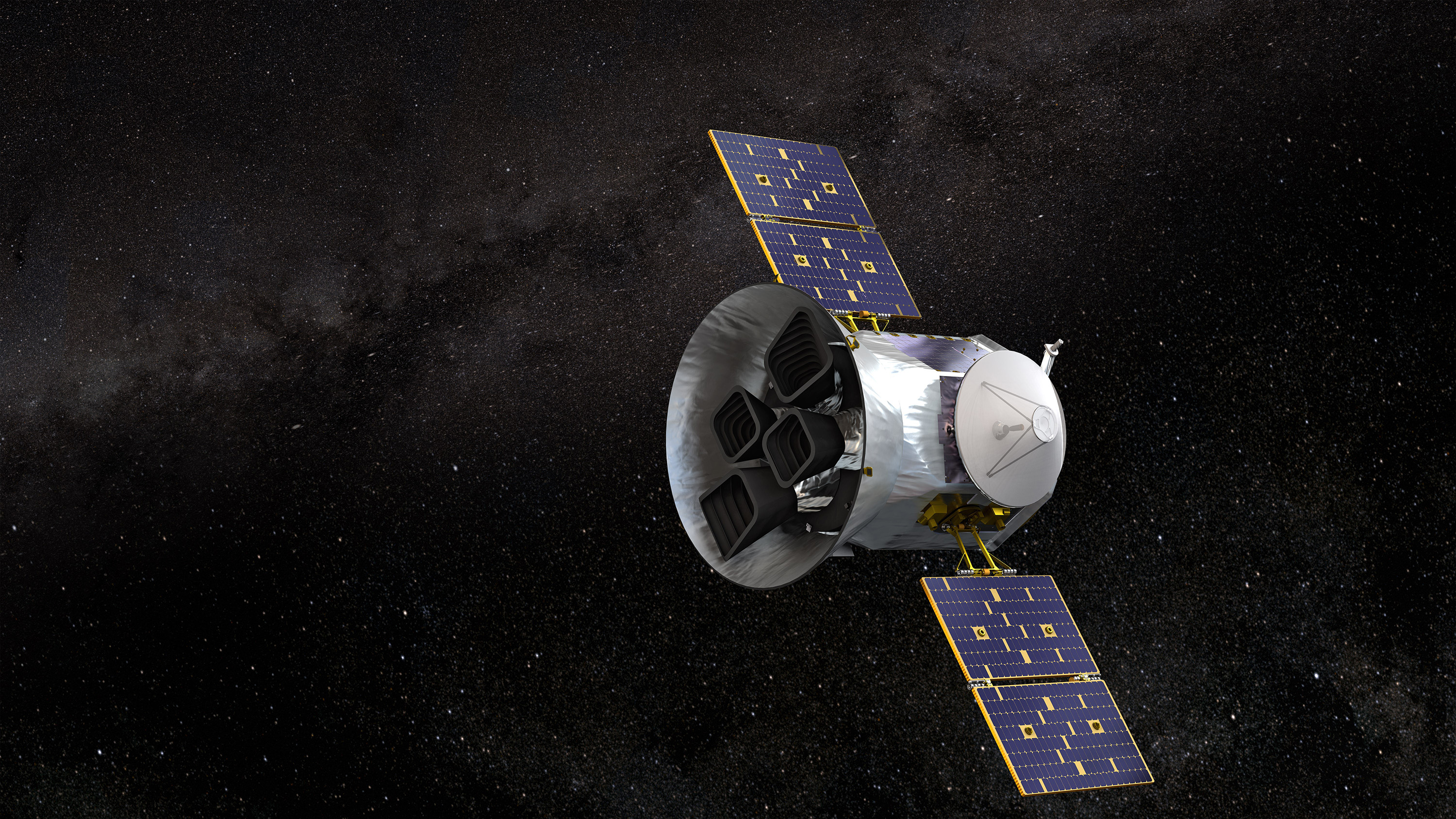

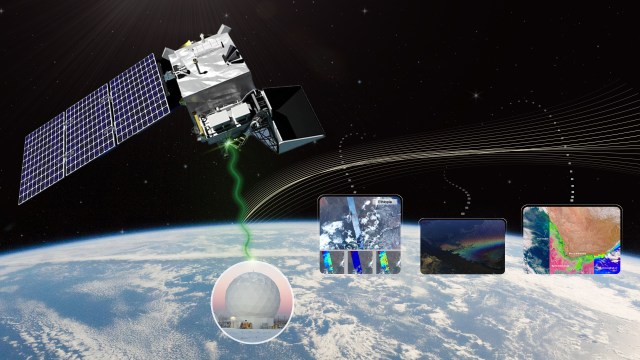

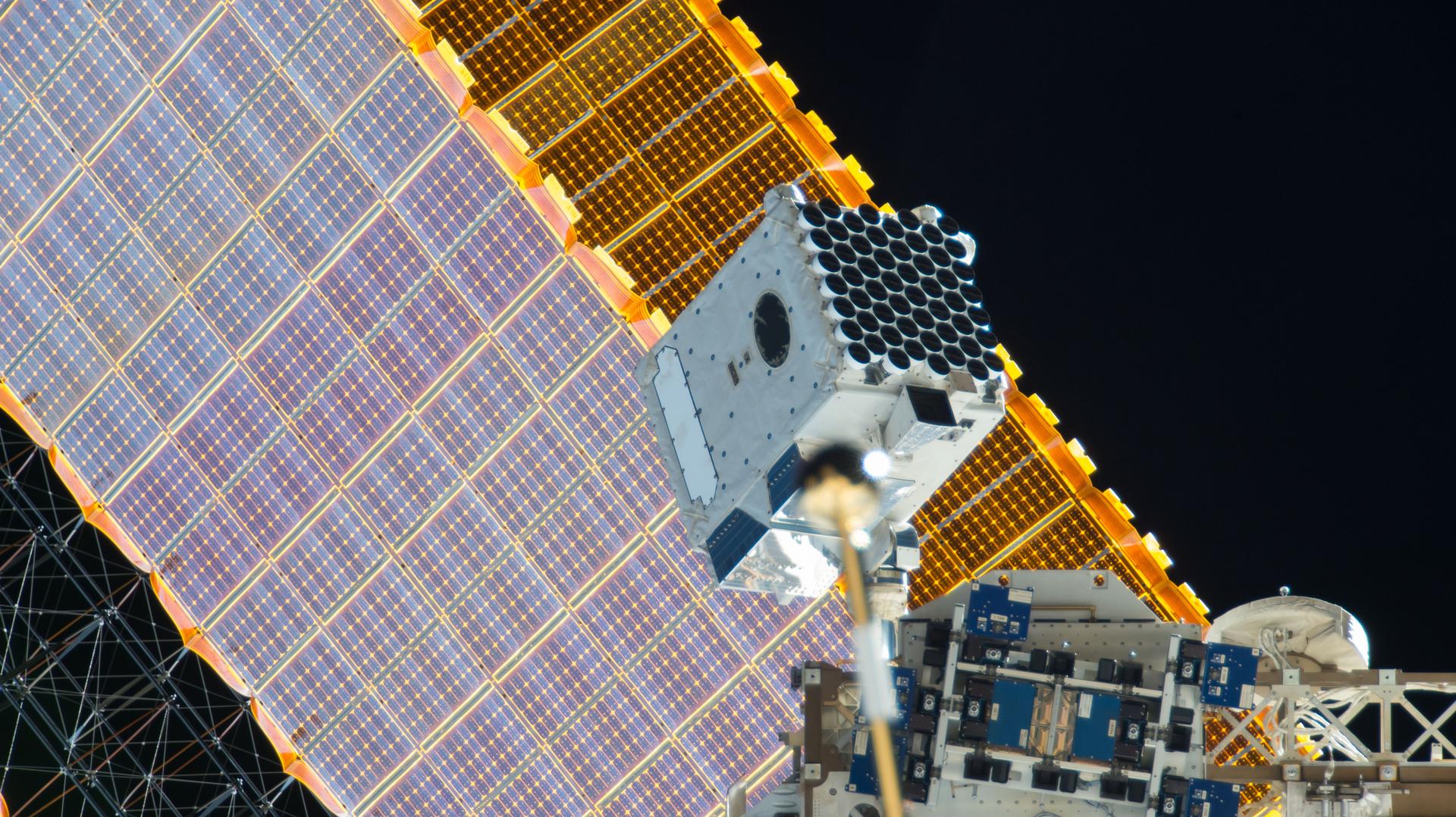

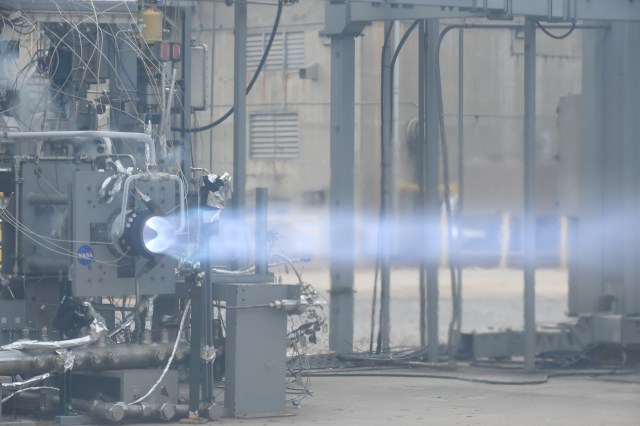

The Game Changing Development (GCD) Program is part of NASA’s Space Technology Mission Directorate (STMD). The Program advances space technologies that may lead to entirely new approaches for the Agency’s future space missions and provide solutions to significant national needs. It balances guided technology development efforts with competitively selected efforts from across academia, industry, NASA, and other government agencies.

GCD Projects

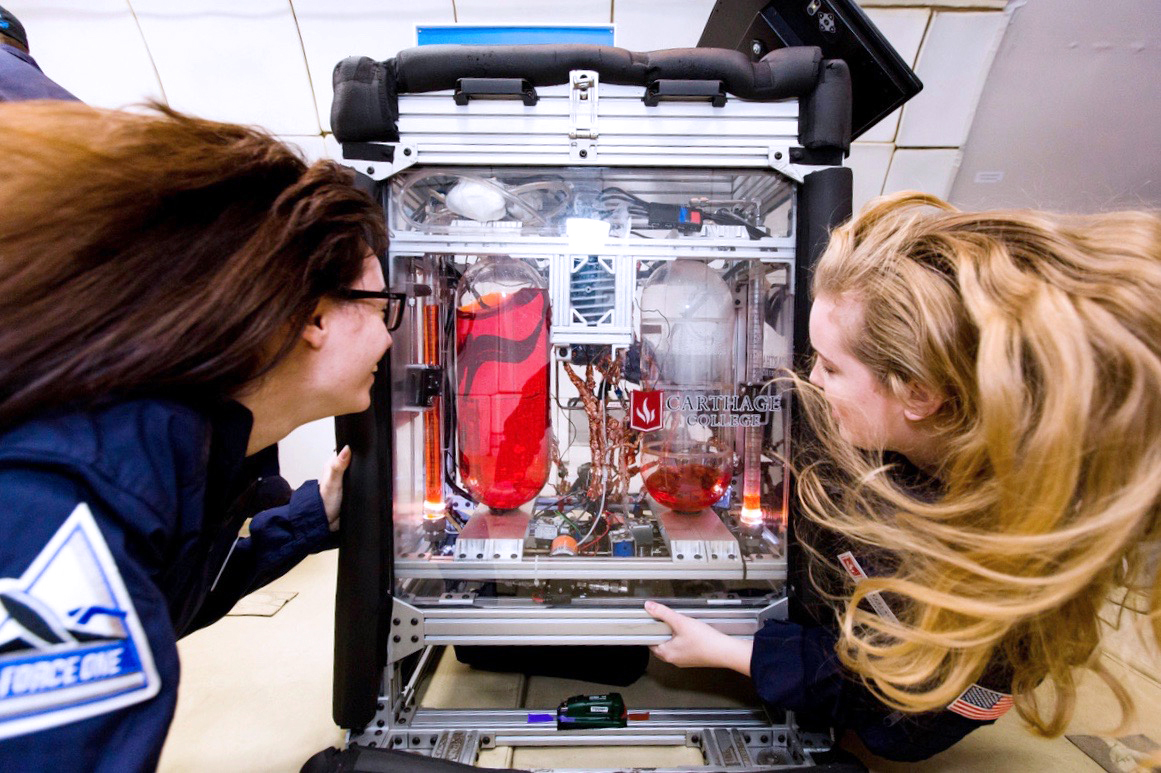

GCD collaborates with research and development teams to advance the most promising ideas through analytical modeling, ground-based testing, and spaceflight demonstration of payloads and experiments.

Learn More about GCD Projects

NASA's BIG Idea Challenge 2024

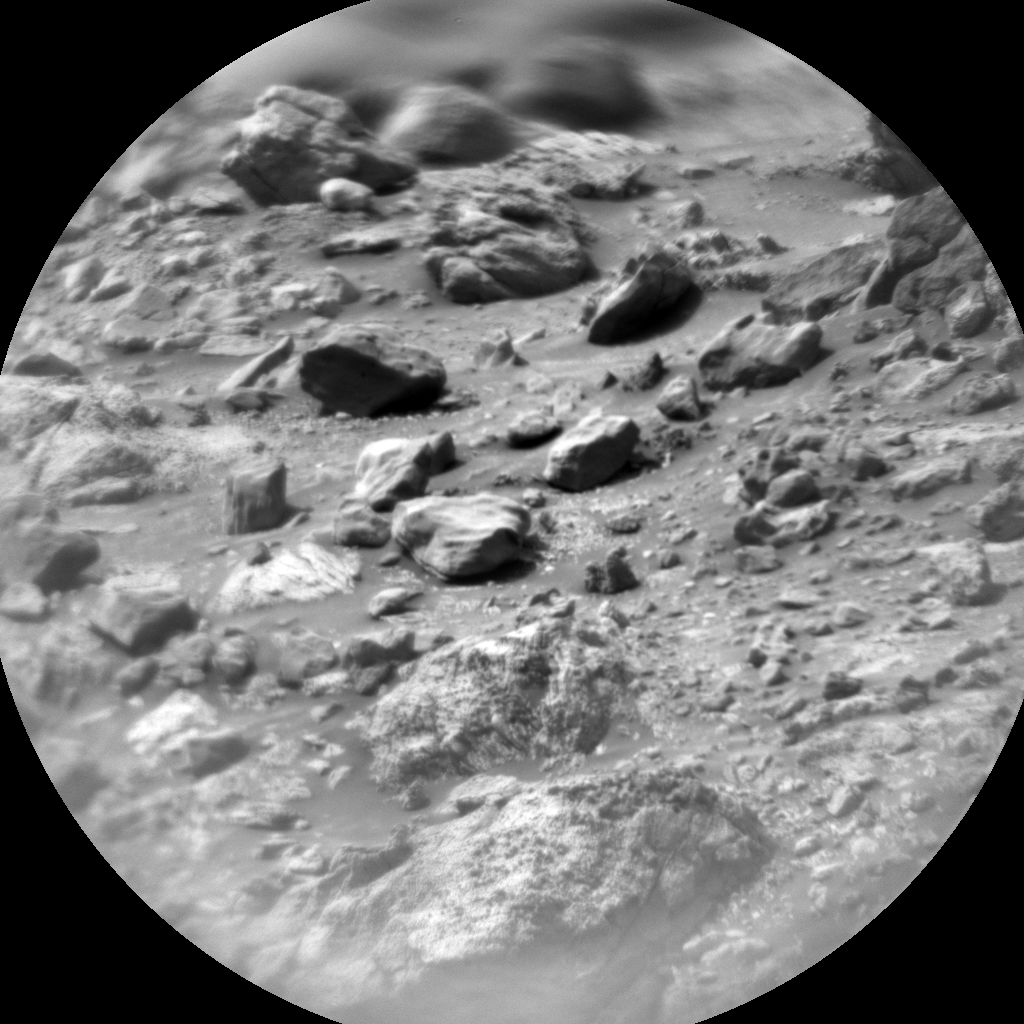

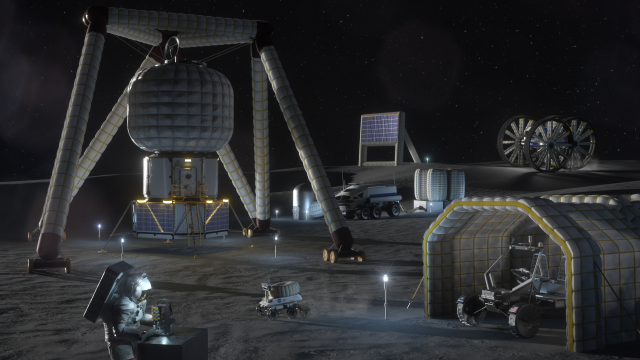

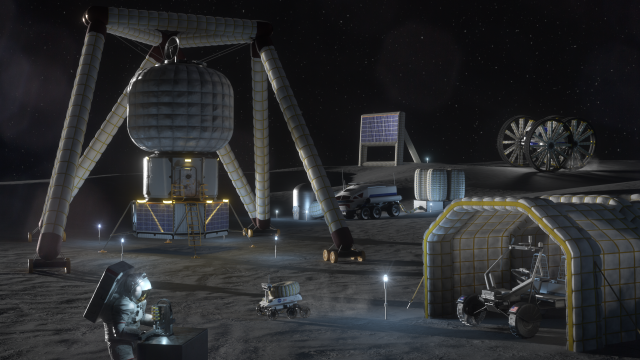

The Breakthrough, Innovative and Game-changing (BIG) Idea Challenge is an initiative supporting the efforts of the Game Changing Development (GCD) Program, within NASA’s Space Technology Mission Directorate (STMD), to rapidly mature innovative, high-impact capabilities and technologies for infusion into a broad array of future NASA missions. BIG Idea 2024 invites teams to design, develop, and demonstrate novel uses of low Size, Weight, and Power (SWaP) inflatable technologies, structures, and systems for lunar operations.

Learn More about the BIG Idea Challenge

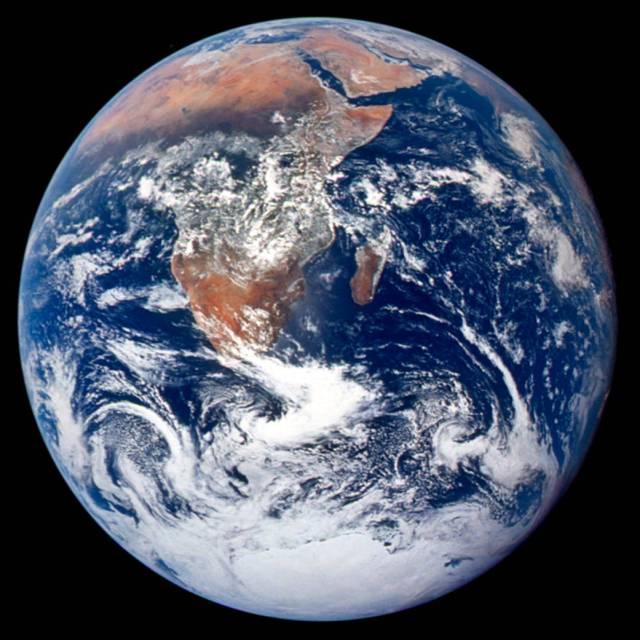

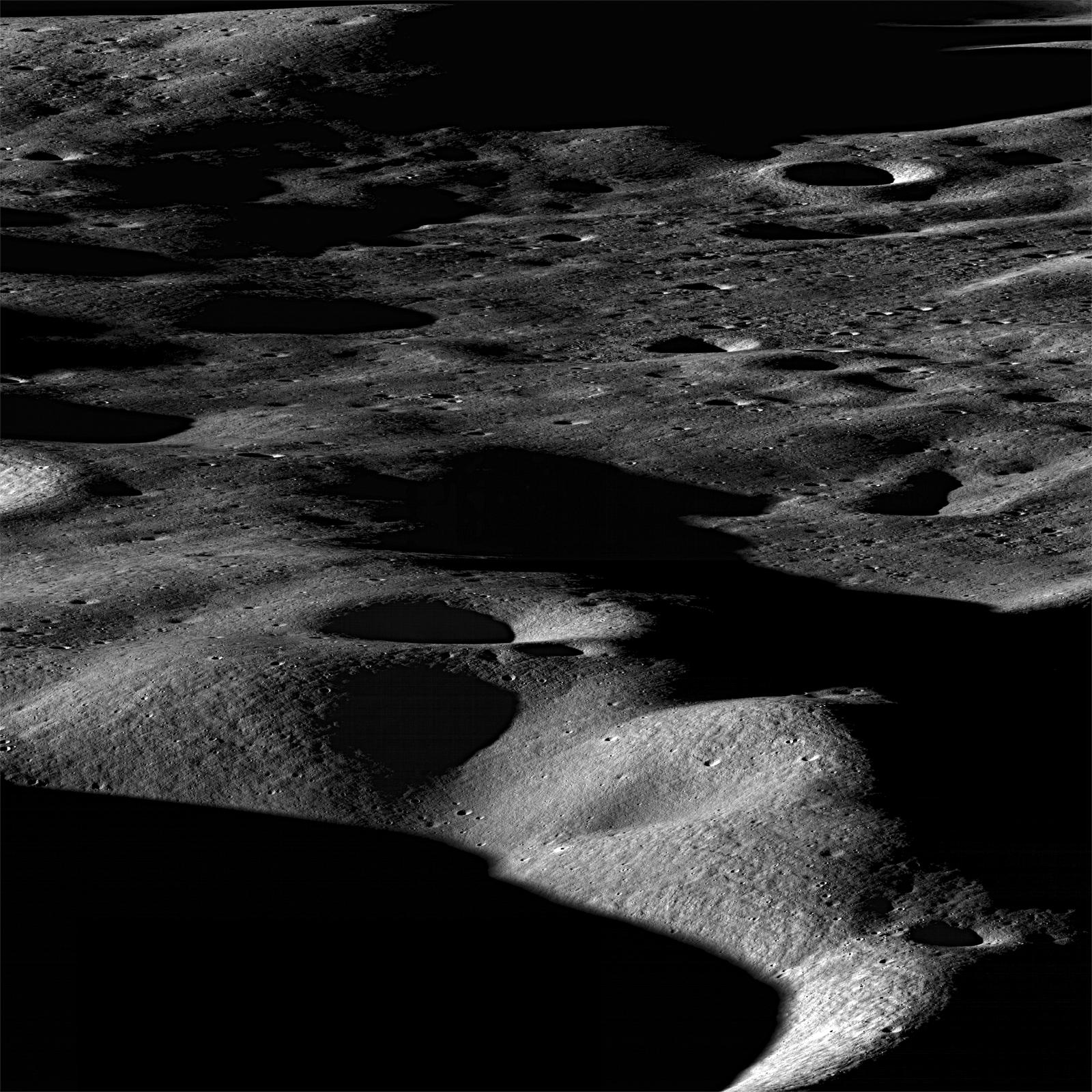

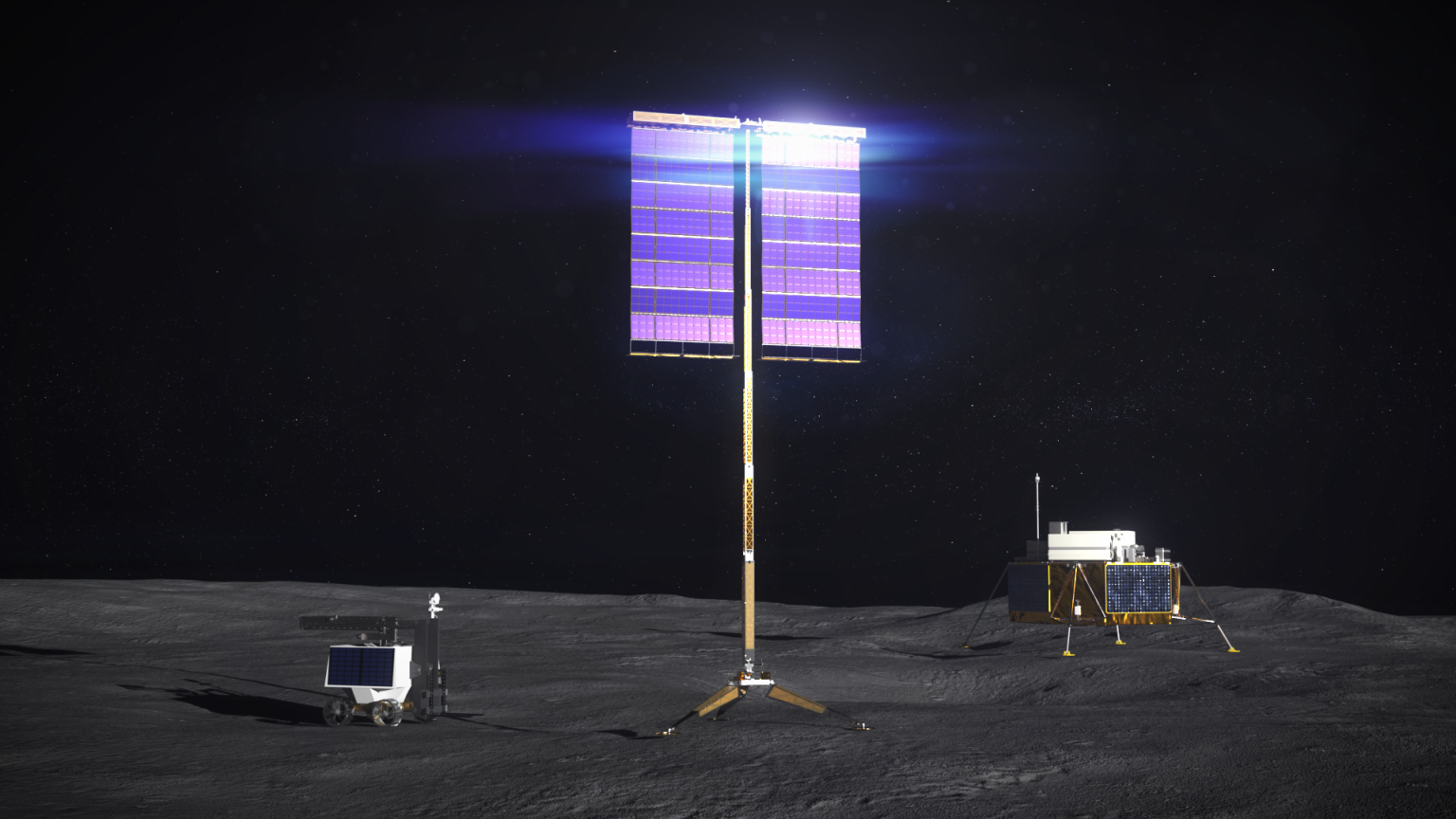

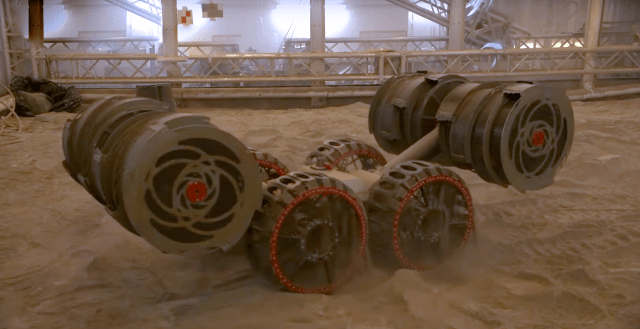

Lunar Surface Innovation Initiative

Part of NASA’s Space Technology Mission Directorate and Technology Maturation portfolio, the Lunar Surface Innovation Initiative (LSII) develops breakthrough technologies and defines new approaches to pave the way for successful human and robotic missions on the surface of the Moon.

Learn More about the Lunar Surface Innovation Initiative